Since A/B testing is the main tool in the conversion optimization toolbox, you've got to master it to be successful. This is the simple success formula for mastering A/B testing.

This is the sixth in a series of articles on growth hacking, which I'm going deep into with a minidegree in Growth Marketing from the CXL Institute. Read more: What is growth hacking? | How to run a successful growth marketing experiment in six steps | How to identify the best channels for your next growth marketing campaign | What the FUD? | How to test the health of your Google Analytics

***

When it comes to conversion optimization, there's no tool as powerful and useful as the A/B test. If you want to be successful, you've got to master it.

These tests are how you evaluate the validity of your growth marketing hypotheses. You take your current page, and then you put it next to a challenger page that has something different, such as a missing element, new copy or a different information architecture.

And then you run the test for a defined period of time, anywhere from a week to a month, and see if the challenger version can improve conversions on a specific goal or event when compared to the default.
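To make that comparison concrete, here's a rough sketch of how you might check whether a challenger really beat the default. It's my own illustration, not something from the course: the function name `compare_conversions` and the traffic numbers are made up, and it assumes a standard two-proportion z-test, which is only one of several ways to evaluate an A/B test.

```python
import math


def compare_conversions(default_visitors, default_conversions,
                        challenger_visitors, challenger_conversions):
    """Compare two page variants with a two-proportion z-test (an assumed method)."""
    rate_default = default_conversions / default_visitors
    rate_challenger = challenger_conversions / challenger_visitors

    # Relative uplift of the challenger over the default page.
    relative_uplift = (rate_challenger - rate_default) / rate_default

    # Pooled conversion rate under the assumption that both pages perform the same.
    pooled = (default_conversions + challenger_conversions) / (
        default_visitors + challenger_visitors
    )
    std_error = math.sqrt(
        pooled * (1 - pooled) * (1 / default_visitors + 1 / challenger_visitors)
    )
    z_score = (rate_challenger - rate_default) / std_error

    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z_score) / math.sqrt(2))

    return {
        "rate_default": rate_default,
        "rate_challenger": rate_challenger,
        "relative_uplift": relative_uplift,
        "p_value": p_value,
    }


# Made-up example numbers: 10,000 visitors per variant.
result = compare_conversions(10_000, 500, 10_000, 580)
print(result)
```

A small p-value suggests the difference is unlikely to be noise, but it says nothing about whether you tested the right thing in the right place, which is what the rest of this post is about.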

As always, that's easier said than done.

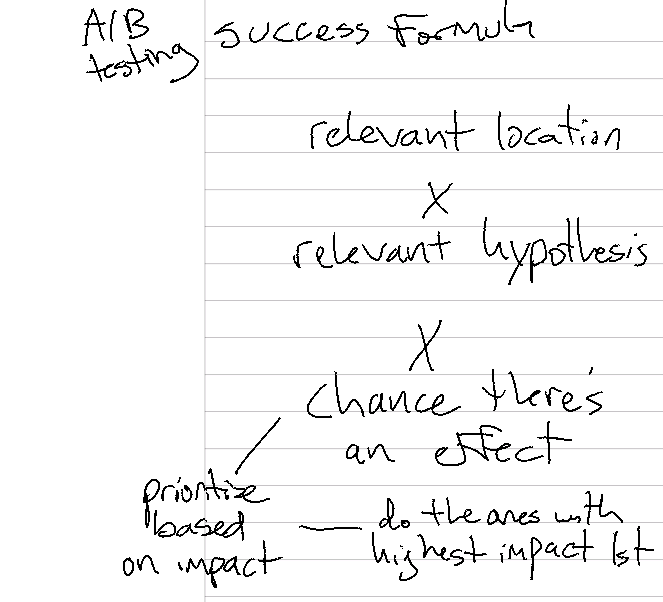

Ton from Online Dialogue cuts through the noise and offers up a simple success formula for mastering A/B testing. Here's my note:

The beauty of the success formula is that it is self-explanatory. It's simple and straightforward. It's also a helpful guide and reminder to keep you on track when prioritizing the order of your A/B tests.

It's about doing the right research, coming up with the right hypothesis, testing it in the right location and then prioritizing based on potential effect. Do the ones first that will make the biggest impact.

Ton @ Online Dialogue

I'll explain each of these real quick below so you have complete clarity on what needs to be done when prioritizing your A/B testing.

Relevant location

The first part of the formula is having a relevant location. What this means is prioritizing pages that both align strongly with your hypothesis and have the greatest potential for impacting core business metrics. These metrics will vary but are always related to the conversion of some sort of event: a completed transaction, an email sign-up or a move down to the next stage of the funnel.

All too often, a great hypothesis with strong potential effect fails because the test was run in the wrong location. This is doubly unfortunate, because a location-based failure can lead to wrong assumptions about the relevancy of the hypothesis and its potential effect.

Think carefully about each test: Is this the right place to test this specific hypothesis? And is this the right product that we want to optimize for our specific focus KPIs?

Don't fall into the trap of just focusing on specific templates. Instead, focus on the products you want to optimize with the right KPI and have hypotheses that are backed up by research.

Ton @ Online Dialogue

Relevant hypothesis

The A/B testing success formula also requires a relevant hypothesis. This hypothesis should be based on extensive customer research, as well as a curiosity about and understanding of broader consumer psychology.

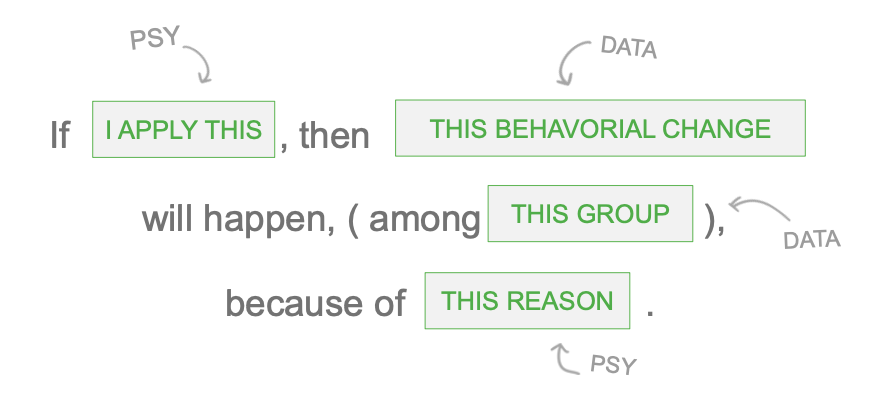

As a refresher, this is what a good hypothesis looks like:

You can and should have many hypotheses. That's a good sign! It means you did the proper customer research before implementing your tests.

User research, which is part of running a growth marketing experiment, should be based on extensive conversations with customers and colleagues. You want to learn as much as you can about user behavior, user challenges, and user fears, uncertainties and doubts.

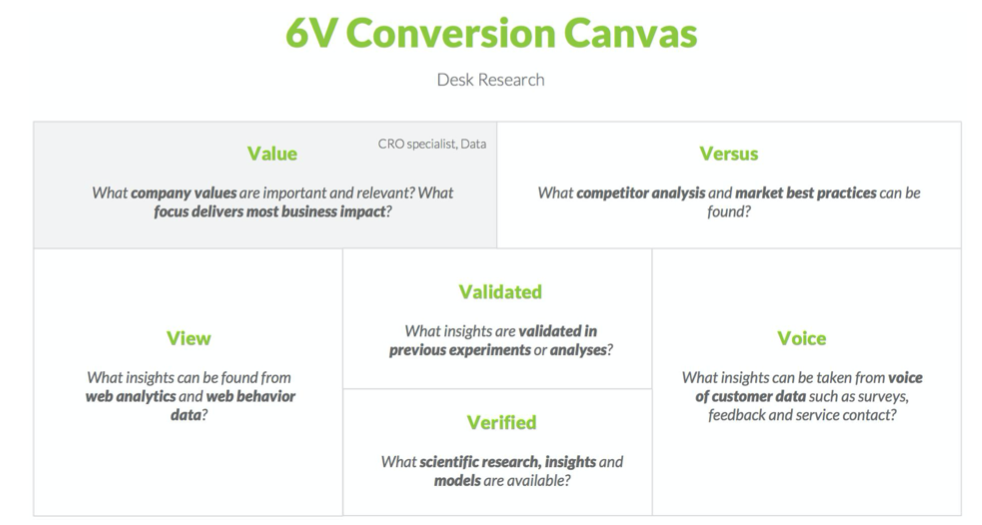

If you're uncertain how to build a long list of relevant hypotheses, turn back to your research using the 6V Conversion Canvas. Each of these areas is rich with insights waiting to be uncovered. When going through this extensive research process, you should find no shortage of potential hypotheses.

Once you have brainstormed a good number of hypotheses, you can start matching them with specific locations for testing. Then you choose which ones to start with based on potential effect.

Potential effect

Last but not least, you want to consider the potential effect of each of your tests. It may be stating the obvious...but you don't want to waste time on experiments that have low impact.

The success formula requires that you start with those location/hypothesis combinations that have the highest potential for moving core business metrics. Of course, the most important metrics can and will shift depending on the season, business needs and all that. Most often, these metrics are directly related to revenue.

However, there are plenty of ways to optimize for potential effect that aren't directly correlated to revenue. For example, if you find a major drop off early on in the checkout phase, optimizing that page may trickle down and have a major impact on revenue.
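If you want a quick way to spot that kind of drop-off, here's a minimal sketch. The step names and visitor counts are invented for illustration, and `biggest_drop_off` is a hypothetical helper of my own, not part of any analytics tool.

```python
def biggest_drop_off(funnel):
    """Return (from_step, to_step, drop_rate) for the largest step-to-step loss.

    `funnel` is an ordered list of (step_name, visitors) pairs.
    """
    worst = None
    # Walk through each pair of consecutive funnel steps.
    for (step, count), (next_step, next_count) in zip(funnel, funnel[1:]):
        drop_rate = 1 - next_count / count
        if worst is None or drop_rate > worst[2]:
            worst = (step, next_step, drop_rate)
    return worst


# Invented checkout funnel, purely for illustration.
checkout_funnel = [
    ("cart", 1000),
    ("shipping", 400),
    ("payment", 320),
    ("confirmation", 280),
]

from_step, to_step, drop_rate = biggest_drop_off(checkout_funnel)
print(f"Largest drop-off: {from_step} -> {to_step} ({drop_rate:.0%})")
```

The step with the largest loss is a candidate location, not an automatic winner. You still need a research-backed hypothesis for why people are leaving there.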

Creating your "experiments roadmap"

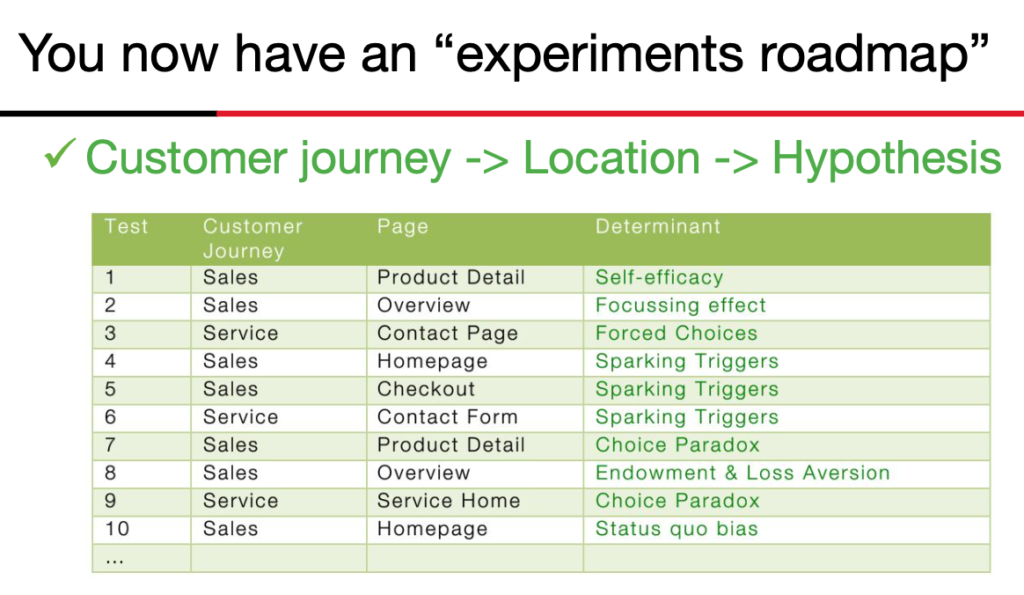

Once you have these three components -- relevant location, relevant hypothesis, and high potential effect -- you can build out your experiments roadmap. This is a list of all the tests that you want to run. Your roadmap gives you an overview of everything you want to accomplish.

As the roadmap shows, each test has a location, a hypothesis based on a psychological determinant, and a stage of the customer journey.

Next, use that roadmap to prioritize first based on Minimum Desired Effect and then, once experiments are running, based on actual measured results. More on that in my next post! In the meantime, check out this great tool for prioritizing your A/B experiments.
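If you'd rather keep your roadmap in code than in a spreadsheet, here's one way it could look. This is only a sketch under my own assumptions: the `Experiment` fields mirror the columns described above, the 1-to-5 `potential_effect` score is a scale I made up, and the example entries are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Experiment:
    location: str          # page or template where the test runs
    hypothesis: str        # what you expect to change and why
    determinant: str       # psychological determinant behind the hypothesis
    journey_stage: str     # stage of the customer journey
    potential_effect: int  # assumed score: 1 (low) to 5 (high)


def prioritize(roadmap):
    """Order experiments so the highest potential effect comes first."""
    return sorted(roadmap, key=lambda exp: exp.potential_effect, reverse=True)


# Hypothetical roadmap entries, for illustration only.
roadmap = [
    Experiment("homepage", "Clearer headline lifts sign-ups",
               "attention", "awareness", 2),
    Experiment("checkout", "Showing security badges reduces abandonment",
               "certainty", "purchase", 5),
    Experiment("product page", "Adding reviews increases add-to-cart",
               "social proof", "consideration", 4),
]

for exp in prioritize(roadmap):
    print(exp.potential_effect, exp.location, "-", exp.hypothesis)
```

Sorting by a single score is the simplest possible approach. You may well want to weigh in traffic, effort or test duration too.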